Introduction

The question of how to govern artificial intelligence (AI) is rightfully top of mind for U.S. lawmakers and policymakers alike. Strides in the development of high-powered large language models (LLMs) like ChatGPT/GPT-4o, Claude, Gemini, and Microsoft Copilot have demonstrated the potentially transformative impact that AI could have on society, replete with opportunities and risks. At the same time, international partners in Europe and competitors like China are taking their own steps toward AI governance.1 In the United States and abroad, public analyses and speculation about AI’s potential impact generally lie along a spectrum ranging from utopian at one end—AI as enormously beneficial for society—to dystopian on the other—an existential risk that could lead to the end of humanity—and many nuanced positions in between.

LLMs grabbed public attention in 2023 and sparked concern about existential AI risks, but other models and applications, such as prediction models, natural language processing (NLP) tools, and autonomous navigation systems, could also lead to myriad harms today. Challenges include discriminatory model outputs based on bad or skewed input data, risks from AI-enabled military weapon systems, as well as accidents with AI-enabled autonomous systems.

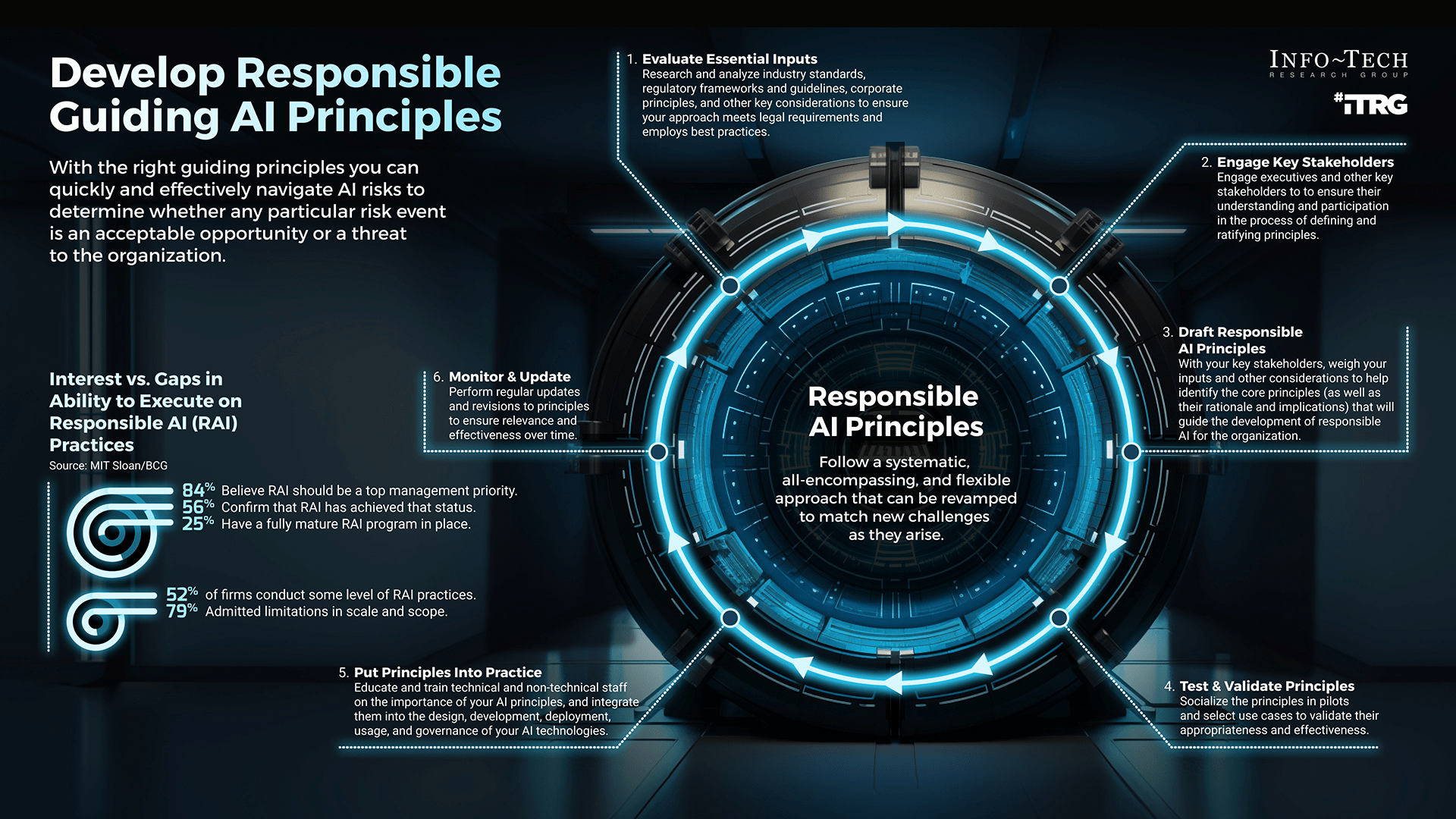

Given AI’s multifaceted potential, in the United States, a flexible approach to AI governance offers the most likely path to success. The different development trajectories, risks, and harms from various AI systems make the prospect of a one-size-fits-all regulatory approach implausible, if not impossible. Regulators should begin to build strength through the heavy lifting of addressing today’s challenges. Even if early regulatory efforts need to be revised regularly, the cycle of repetition and feedback will lead to improved muscle memory, crucial to governing more advanced future systems whose risks are not yet well understood.

President Biden’s October 2023 Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, as well as proposed bipartisan AI regulatory frameworks, have provided useful starting points for establishing a comprehensive approach to AI governance in the United States.2 These stand atop existing statements and policies by federal agencies like the U.S. Department of Justice, the Federal Trade Commission, as well as the U.S. Equal Employment Opportunity Commission, among others.3

In order for future AI governance efforts to prove most effective, we offer three principles for U.S. policymakers to follow. We have drawn these thematic principles from across CSET’s wide body of original, in-depth research, as well as granular findings and specific recommendations on different aspects of AI, which we cite throughout this report. They are:

- Know the terrain of AI risk and harm: Use incident tracking and horizon-scanning across industry, academia, and the government to understand the extent of AI risks and harms; gather supporting data to inform governance efforts and manage risk.

- Prepare humans to capitalize on AI: Develop AI literacy among policymakers and the public to be aware of AI opportunities, risks, and harms while employing AI applications effectively, responsibly, and lawfully.

- Preserve adaptability and agility: Develop policies that can be updated and adapted as AI evolves, avoiding onerous regulations or regulations that become obsolete with technological progress; ensure that legislation does not allow incumbent AI firms to crowd out new competitors through regulatory capture.

These principles are interlinked and self-reinforcing: continually updating the understanding of the AI landscape will help lawmakers remain agile and responsive to the latest advancements, and inform evolving risk calculations and consensus.

- For Europe, the EU AI Act is the preeminent piece of AI regulation. See: Adam Satariano, “E.U. Agrees on Landmark Artificial Intelligence Rules,” The New York Times, December 8, 2023, https://www.nytimes.com/2023/12/08/technology/eu-ai-act-regulation.html; and Mia Hoffmann, “The EU AI Act: A Primer,” Center for Security and Emerging Technology, September 26, 2023, https://cset.georgetown.edu/article/the-eu-ai-act-a-primer/. See also, “The EU Artificial Intelligence Act: Up-to-Date Developments and Analyses of the EU AI Act,” EU Artificial Intelligence Act, 2024, https://artificialintelligenceact.eu/. For China, see, for example, the CSET translation of “Regulations for the Promotion of the Development of the Artificial Intelligence Industry in Shanghai Municipality,” the Standing Committee of the 15th Shanghai Municipal People’s Congress, originally published September 23, 2022, https://cset.georgetown.edu/publication/regulations-for-the-promotion-of-the-development-of-the-artificial-intelligence-industry-in-shanghai-municipality/.

- The White House, “Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence,” October 30, 2023, https://www.whitehouse.gov/briefing-room/presidential-actions/2023/10/30/executive-order-on-the-safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence/; Office of Management and Budget, Executive Office of the President, OMB Memorandum M-24-10, “Advancing Governance, Innovation, and Risk Management for Agency Use of Artificial Intelligence” (2024), https://www.whitehouse.gov/wp-content/uploads/2024/03/M-24-10-Advancing-Governance-Innovation-and-Risk-Management-for-Agency-Use-of-Artificial-Intelligence.pdf. In September 2023, U.S. Senators Richard Blumenthal (D-CT) and Josh Hawley (R-MO), chair and ranking member of the U.S. Senate Subcommittee on Privacy, Technology, and the Law, introduced a Bipartisan Framework for U.S. AI Act. The framework calls for establishing a licensing regime administered by an independent oversight body; ensuring legal accountability for harms caused by AI; promoting transparency; protecting consumers and children; and defending national security amid international competition. See, Senator Richard Blumenthal and Senator Josh Hawley, “Bipartisan Framework for U.S. AI Act,” Senate Subcommittee on Privacy, Technology, and the Law, September 7, 2023, https://www.blumenthal.senate.gov/imo/media/doc/09072023bipartisanaiframework.pdf. Senator Chuck Schumer (D-NY) introduced a high-level SAFE Innovation Framework around AI. The acronym SAFE in Senator Schumer’s framework stands for Security, Accountability, Foundations (in democratic values), and Explain (i.e., “determine what information the federal government needs from AI developers and deployers to be a better steward of the public good, and what information the public needs to know about an AI system, data, or content”). See, Senator Chuck Schumer, “Safe Innovation Framework,” 2023, https://www.democrats.senate.gov/imo/media/doc/schumer_ai_framework.pdf.

- Department of Labor, “Joint Statement on Enforcement of Civil Rights, Fair Competition, Consumer Protection, and Equal Opportunity Laws in Automated Systems,” April 2024, https://www.dol.gov/sites/dolgov/files/OFCCP/pdf/Joint-Statement-on-AI.pdf.