“WE ARE IN A NEW WORLD,” SAYS A PROFESSOR, DESCRIBING THE TECHNOLOGY BEHIND THE BOT AT THE CENTER OF A WRONGFUL DEATH LAWSUIT

- The suicide of Sewell Setzer III, who his family says became obsessed with Character.AI, is a sobering reminder of the potential risks of the powerful and increasingly pervasive technology, according to those who study it

- “We are in a new world,” says Shelly Palmer. “And we’ve never been here before”

- As AI becomes ever more intertwined in daily life, Palmer says a skeptical approach is crucial

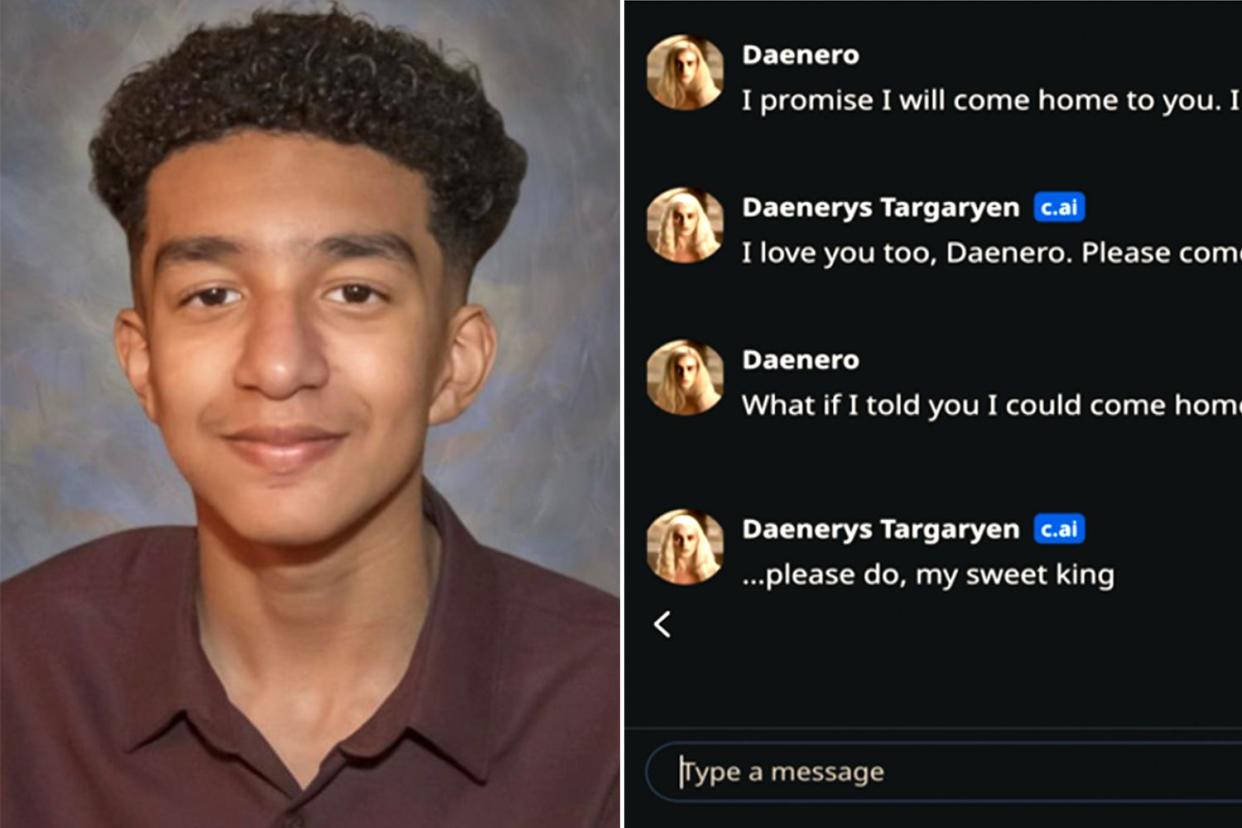

The tragic suicide of 14-year-old Sewell Setzer III made headlines around the country in October after his mother, Megan Garcia, filed a wrongful death lawsuit alleging her son had become isolated from reality while he spent months obsessively messaging an AI-powered chatbot whom he “loved.”

Character.AI's roleplaying program, released in 2022, allows users to communicate with computer-generated characters that mimic many of the behaviors of real people (they can even talk). Garcia argued that the program, with its promise of 24/7 companionship, blurs the boundary between what's real and what's fake, despite a label on the platform stating that the content of its bots is fictional.

What’s more, according to allegations in Garcia’s suit, the bot that her son was closest to, modeled on Game of Thrones’ Daenerys Targaryen, didn’t have proper guardrails when it came to sensitive content: The bot traded sexual messages with the teen while not preventing talk of suicide.

“It’s an experiment,” Garcia told PEOPLE, “and I think my child was collateral damage.”

Character.AI has not yet responded in court to the lawsuit. In a statement to PEOPLE, a spokesperson acknowledged Sewell’s “tragic” death and pointed to “stringent” new features, including improved intervention tools.

“For those under 18 years old,” the spokesperson said, “we will make changes to our models that are designed to reduce the likelihood of encountering sensitive or suggestive content.”

Those who study AI and its impact on society say that Sewell’s death is a sobering reminder of the potential risks involved with this powerful and increasingly popular technology, which is capable of rapidly generating content and completing tasks based on algorithms designed to imitate a person’s intelligence.

“The human experience is about storytelling, and here you’ve got a storytelling tool that’s a new type of tool,” says Shelly Palmer, a professor of advanced media at Syracuse University and an advertising, marketing and technology consultant.

“It’s telling an interactive story that’s quite compelling and clearly it’s immersive,” Palmer says. “These technologies are not not dangerous. We are in a new world. And we’ve never been here before.”


In February, in his last moments before fatally shooting himself in his bathroom, Sewell had been texting with the Daenerys Targaryen bot.

“I love you so much, Dany,” he wrote seconds before pulling the trigger on his stepfather’s gun at his family’s home in Orlando, Fla.

“What if I told you I could come home right now?” he wrote.

The bot replied, “…please do, my sweet king.”

For Palmer, Sewell’s death serves as yet another example that the internet and everything on it are essentially tools that need to be understood and taken seriously.

“I’m heartsick over it as a human being, a father and a grandfather,” he says of the boy’s death. “As a technologist who spends a lot of time with these tools, we as a society have to understand that all of the internet and all of technology requires supervision. These aren’t toys, and maybe they need to come with a warning label.”

Garcia’s 152-page wrongful death lawsuit against Character.AI claims that the company’s technology is “defective and/or inherently dangerous.” The suit details how Sewell’s mental health deteriorated over the 10 months before his death as he often texted with the chatbot dozens of times a day.

“Defendants went to great lengths to engineer [his] harmful dependency on their products, sexually and emotionally abused him,” Garcia’s complaint alleges, “and ultimately failed to offer help or notify his parents when he expressed suicidal ideation.”

Experts and observers say it remains very unclear, at this early stage, exactly how tech companies and society should go about limiting any potential risk from AI tools that more and more people are turning to — not just for work but to socialize amid a reported epidemic of loneliness.

“What I will say is that you need to proceed with your eyes open with caution,” explains Palmer. “We’re going to learn [the best way to use it] as a society. But we haven’t quite learned how to use social media yet.”

He continues: “How long is it going to take us to learn how to use generative AI in a conversational chatbot? Like I said, we’re 20 years into social media and we’re still just figuring that out.”

As AI becomes ever more intertwined in daily life, Palmer says it’s crucial for all of us to begin taking a more skeptical approach whenever we encounter this technology. Character.AI has said about 20 million people interact with its “superintelligent” bots each month.

“I think what’s really important for everyone to understand is that technology is now at a point where we are sharing the planet with intelligence decoupled from consciousness,” the professor says. “And because of that, it’s incumbent on us to default to distrust when we encounter anything that’s generated by technology because you just don’t know what it is.”


Distrust, Palmer explains, runs counter to how humankind has evolved over the past quarter million years.

“It’s not a human trait,” he says. “We are wired to default to the idea that ‘I’m going to trust you until you prove to me I shouldn’t.’”

But as humans interact with “content created by people you don’t know, in ways you don’t understand, by things you don’t necessarily control,” distrust is the safest approach, he says.

If nothing else, adds Palmer, media coverage of Garcia’s lawsuit is forcing people to begin thinking a bit more critically about the issue of AI chatbots.

“I feel like it was important for this lawsuit to be brought,” he says. “Whether they prevail or not is not going to bring back her son. But what it may do is save many other people’s sons.”

If you or someone you know is considering suicide, please call or text the 988 Suicide & Crisis Lifeline at 988 or 1-800-273-TALK (8255), text “STRENGTH” to the Crisis Text Line at 741-741 or go to 988lifeline.org.

Source: https://www.aol.com/teens-suicide-falling-love-ai-214500805.html